- Harsh Maur

- January 25, 2025

- 8 Mins read

- Scraping

An Ultimate Guide to JavaScript Web Scraping in 2025

Want to scrape data from websites like a pro in 2025? JavaScript is a strong choice for handling modern, dynamic websites. This guide covers what you need to know about web scraping with JavaScript, from tools to methods, while staying ethical and efficient.

It walks through step-by-step examples and practical tips, so whether you're new or experienced, you can build efficient scrapers that remain compliant.

Can JavaScript be used for web scraping?

Yes. JavaScript, especially in combination with Node.js, is widely used for web scraping. Tools like Puppeteer, Selenium, Axios, and Cheerio make it straightforward to extract data from both static sites and dynamic sites that render or alter content with JavaScript.

Is Python or JavaScript better for web scraping?

Python and JavaScript both offer substantial advantages for web scraping. Python is well-suited for fast development, data parsing, and large-scale extraction, while JavaScript excels with browser APIs and scraping Single-Page Applications (SPAs). The best choice depends on your needs.


Setting Up Your JavaScript Web Scraping Environment

Setting up the right environment is essential to make the most of JavaScript's strengths for web scraping. Here's a breakdown of the tools, libraries, and steps you'll need to get started.

Tools and Libraries for Web Scraping

JavaScript web scraping often involves these main tools:

- Node.js: A runtime environment for running JavaScript outside the browser.

- npm: A package manager to install and manage dependencies.

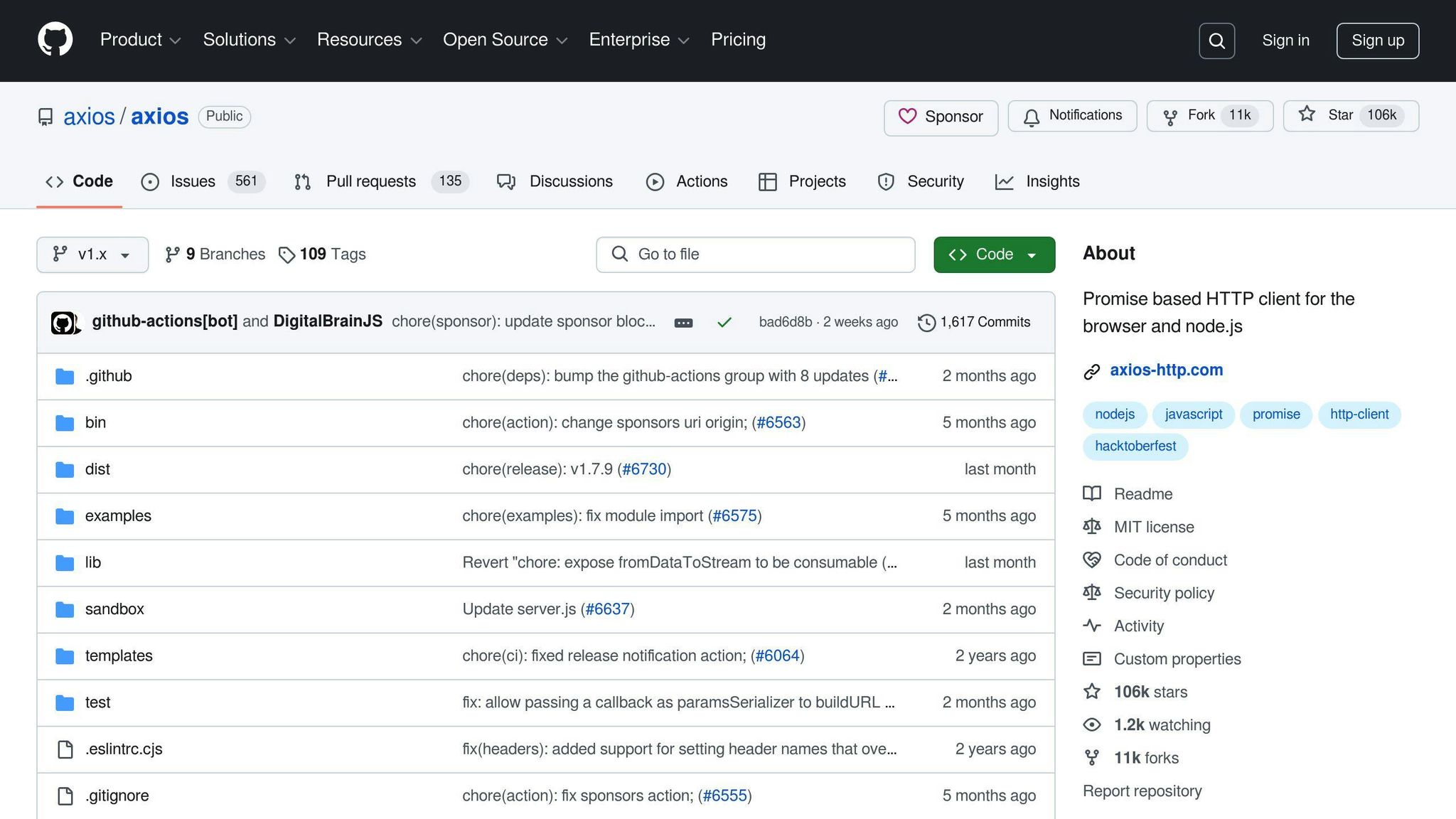

- Axios: Used for making HTTP requests.

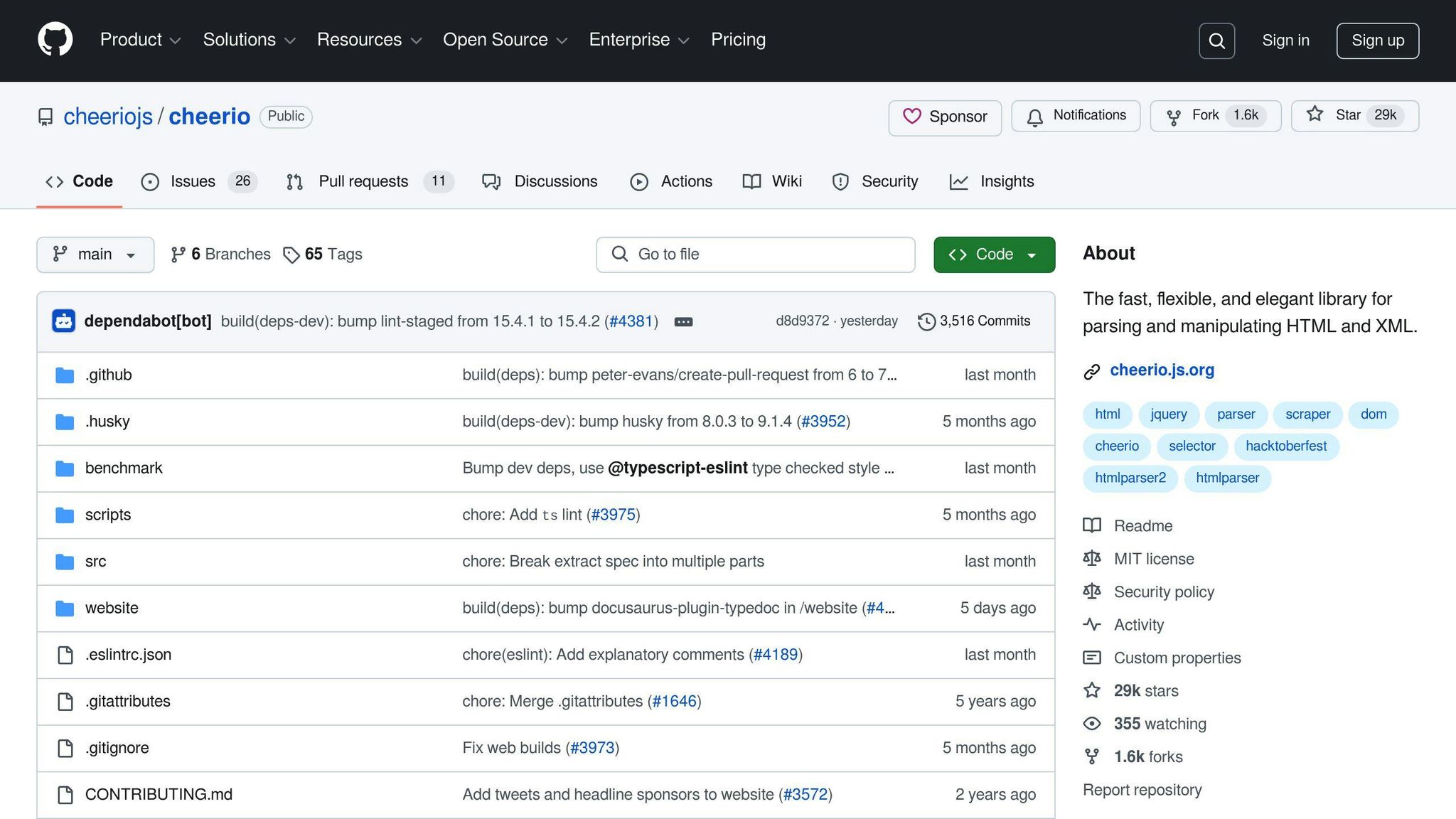

- Cheerio: A library for parsing and working with HTML.

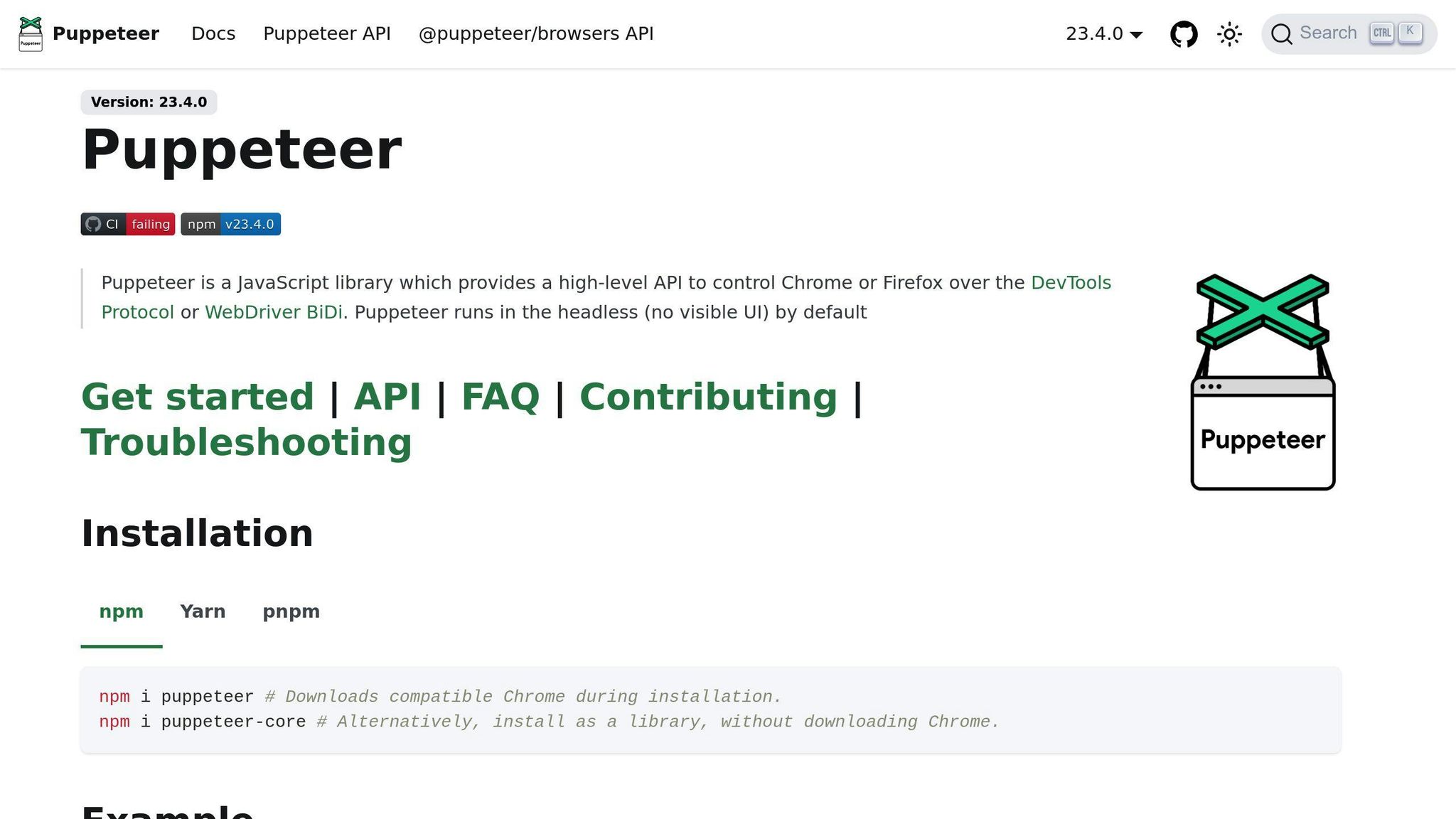

- Puppeteer: A tool for automating and controlling a headless browser.

| Library | Primary Use Case | Best For |
|---|---|---|
| Axios | HTTP requests | Fetching data from static pages |
| Cheerio | HTML parsing | Simple DOM manipulation |
| Puppeteer | Browser automation | Scraping dynamic content |

Installing Dependencies

Start by initializing your project:

mkdir scraper && cd scraper && npm init -y

Next, install the required libraries:

npm install axios cheerio puppeteer

Organizing Your Project

A clear project structure makes your code easier to manage and scale. Here's a suggested layout:

- config/: Stores configuration files and environment variables.

- src/scrapers/: Contains modules for specific scraping tasks.

- src/utils/: Includes helper functions and reusable utilities.

- data/output/: Holds the scraped data and results.

This setup ensures everything is organized and easy to navigate, which will be especially helpful as you apply the techniques discussed in later sections.

Basic Techniques for JavaScript Web Scraping

JavaScript web scraping often involves three key libraries that tackle different parts of the process. Here's how to use each one effectively for various scraping tasks.

Using Axios for HTTP Requests

Axios is a great choice for handling HTTP requests and fits neatly into a project's utils/ directory. Here's an example of how to fetch a webpage:

    const axios = require('axios');

    // Fetch a page's HTML, sending browser-like request headers
    async function fetchWebPage(url) {
      const response = await axios.get(url, {
        headers: {
          'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36',
          'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9'
        }
      });
      // For HTML pages, response.data is the page source as a string
      return response.data;
    }

You can customize this further with additional headers, timeout settings, or retry logic to handle more complex scenarios.
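As one way to add the retry logic mentioned above, here is a minimal sketch of a generic retry helper with exponential backoff. The helper name withRetry and its options (retries, baseDelayMs) are illustrative assumptions, not part of Axios; it wraps any async function, so it can be placed around fetchWebPage without changing it.

```javascript
// Generic retry helper with exponential backoff (illustrative, not an Axios API).
// `task` is any async function, for example () => fetchWebPage(url).
async function withRetry(task, { retries = 3, baseDelayMs = 500 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await task(attempt);
    } catch (error) {
      lastError = error;
      if (attempt === retries) break;
      // Wait baseDelayMs, then 2x, then 4x, ... before the next attempt
      const delay = baseDelayMs * 2 ** attempt;
      await new Promise(resolve => setTimeout(resolve, delay));
    }
  }
  // Every attempt failed: surface the last error to the caller
  throw lastError;
}

// Usage (inside an async function):
// const html = await withRetry(() => fetchWebPage('https://example.com'));
```

For timeouts, Axios accepts a timeout value in milliseconds in the request config, which pairs well with a helper like this because a timed-out request is rejected and then retried.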

Extracting Data with Cheerio

Cheerio makes it easy to extract data by using a selector-based approach, similar to jQuery. Here's an example:

    const cheerio = require('cheerio');

    function extractProductData(html) {
      const $ = cheerio.load(html);

      // Extracting basic details
      const data = {
        title: $('.product-title').text().trim(),
        price: $('.price-value').text().trim(),
        description: $('.product-description').text().trim()
      };

      // Getting nested information
      const imageUrls = $('.product-gallery img').map((_, img) => $(img).attr('src')).get();
      const metadata = $('.product-meta').data('product-info');

      return { ...data, imageUrls, metadata };
    }

Cheerio works well for static websites, but for dynamic content, you'll need a tool like Puppeteer.

Scraping Dynamic Content with Puppeteer

Puppeteer enables browser automation, making it ideal for scraping content that requires JavaScript execution. Here's how to scrape a dynamic webpage:

    const puppeteer = require('puppeteer');

    async function scrapeDynamicPage(url) {
      const browser = await puppeteer.launch();
      try {
        const page = await browser.newPage();
        // Wait until the network is idle, then until the target element exists
        await page.goto(url, { waitUntil: 'networkidle0' });
        await page.waitForSelector('.dynamic-content');

        // This callback runs inside the page, not in Node.js
        return await page.evaluate(() => ({
          title: document.querySelector('.title')?.innerText ?? null,
          items: Array.from(document.querySelectorAll('.item'))
            .map(item => item.textContent)
        }));
      } finally {
        // Close the browser even if navigation or extraction fails
        await browser.close();
      }
    }

You can also enhance Puppeteer's capabilities with these techniques:

    // Click a "Load More" button and wait for new content
    await page.click('.load-more-button');
    await page.waitForSelector('.new-content');

    // Simulate infinite scrolling
    await page.evaluate(() => {
      window.scrollTo(0, document.body.scrollHeight);
    });
    // page.waitForTimeout() was removed in Puppeteer v22, so use a plain timer
    await new Promise(resolve => setTimeout(resolve, 1000));

These methods are especially useful for dealing with dynamic pages and pave the way for more advanced pagination techniques discussed later.


Advanced Web Scraping Strategies with JavaScript

Handling Pagination and Infinite Scrolling

Many modern websites use pagination or infinite scrolling to display large datasets. Here's how you can handle infinite scrolling effectively with Puppeteer:

    const puppeteer = require('puppeteer');

    async function scrapeInfiniteScroll(url, scrollTimes = 5) {
      const browser = await puppeteer.launch();
      try {
        const page = await browser.newPage();
        await page.goto(url);

        let previousHeight = 0;
        for (let i = 0; i < scrollTimes; i++) {
          const currentHeight = await page.evaluate('document.body.scrollHeight');
          // Stop when the previous scroll loaded nothing new
          if (currentHeight === previousHeight) {
            break;
          }
          await page.evaluate('window.scrollTo(0, document.body.scrollHeight)');
          // Give the page time to load more items
          // (page.waitForTimeout() was removed in Puppeteer v22)
          await new Promise(resolve => setTimeout(resolve, 2000));
          previousHeight = currentHeight;
        }

        return await page.evaluate(() => {
          return Array.from(document.querySelectorAll('.item')).map(item => ({
            title: item.querySelector('.title')?.textContent,
            description: item.querySelector('.description')?.textContent
          }));
        });
      } finally {
        // Close the browser even if scrolling or extraction fails
        await browser.close();
      }
    }

This approach leverages Puppeteer's ability to handle dynamic content and ensures you can extract data from pages that load content as you scroll.
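Classic numbered pagination can be handled with a simpler loop. The sketch below is one possible shape, assuming a hypothetical fetchPage(pageNumber) callback that you supply (for example, a function that requests a page URL and parses it into an array of items); the names scrapeAllPages, maxPages, and delayMs are illustrative.

```javascript
// Walks numbered pages until one comes back empty or maxPages is reached.
// `fetchPage(pageNumber)` is a hypothetical callback returning an array of items.
async function scrapeAllPages(fetchPage, { maxPages = 50, delayMs = 1000 } = {}) {
  const results = [];
  for (let pageNumber = 1; pageNumber <= maxPages; pageNumber++) {
    const items = await fetchPage(pageNumber);
    if (!items || items.length === 0) break; // no more data
    results.push(...items);
    // Pause between pages to keep the request rate low
    await new Promise(resolve => setTimeout(resolve, delayMs));
  }
  return results;
}
```

The maxPages cap is a safety net: if a site keeps returning results for any page number, the loop still ends.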

Managing Authentication and Sessions

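Accessing protected data often requires maintaining a session. In Node.js, Axios does not store cookies between requests (the withCredentials option only affects browsers), so the example below forwards the session cookie manually:

    const axios = require('axios');

    async function authenticatedScraping(loginUrl, targetUrl, credentials) {
      const axiosInstance = axios.create({
        headers: {
          'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/91.0.4472.124'
        }
      });

      // Log in without following redirects so the Set-Cookie header is not lost
      const loginResponse = await axiosInstance.post(loginUrl, {
        username: credentials.username,
        password: credentials.password
      }, {
        maxRedirects: 0,
        validateStatus: status => status < 400
      });

      // Keep only the name=value part of each cookie
      const cookies = (loginResponse.headers['set-cookie'] || [])
        .map(cookie => cookie.split(';')[0])
        .join('; ');

      // Send the session cookie with the follow-up request
      const response = await axiosInstance.get(targetUrl, {
        headers: { Cookie: cookies }
      });
      return response.data;
    }

This method lets your scraper log in and reuse the session on pages that require authentication. It assumes a cookie-based login that accepts a JSON body; long-running jobs should log in again when the session expires.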

Controlling Request Rates

To avoid overwhelming servers or triggering anti-scraping measures, it's essential to control the rate of your requests. Here’s an example of a rate-limited scraper:

    const axios = require('axios');

    class RateLimitedScraper {
      constructor(requestsPerMinute = 30) {
        this.interval = (60 * 1000) / requestsPerMinute;
        this.lastRequest = 0;
        // Requests are chained so concurrent callers run one at a time
        this.queue = Promise.resolve();
      }

      addToQueue(url) {
        const task = this.queue.then(async () => {
          // Wait until at least one interval has passed since the last request
          const wait = this.interval - (Date.now() - this.lastRequest);
          if (wait > 0) {
            await new Promise(resolve => setTimeout(resolve, wait));
          }
          this.lastRequest = Date.now();
          try {
            const response = await axios.get(url);
            return response.data;
          } catch (error) {
            console.error(`Error scraping ${url}: ${error.message}`);
            throw error;
          }
        });
        // Keep the chain alive even if this request fails
        this.queue = task.catch(() => {});
        return task;
      }
    }

For large-scale projects, tools like the Apify SDK can manage request queues and concurrency across many parallel requests. Adding delays between requests not only reduces the risk of detection but also ensures you don't overload the target server.

When implementing these techniques, always respect the website's terms of service and check its robots.txt file. Proper error handling is also important to ensure your scraper performs reliably and responsibly.

Ethical Web Scraping Practices

Respecting Website Policies

When scraping data, it's crucial to follow website rules and guidelines. Start by reviewing the robots.txt file. This file outlines which parts of the site can be accessed by automated tools and which are restricted. These rules help shape the technical measures you need to take, especially when dealing with sensitive data or planning request patterns.
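To make the robots.txt check concrete, here is a simplified sketch of a rule matcher. It is an illustration under stated assumptions, not a complete parser: it handles only User-agent, Allow, and Disallow lines with plain path prefixes, ignores wildcards and Crawl-delay, and uses the first matching group. The function name isPathAllowed is hypothetical; for production use, a maintained robots.txt parsing package is a safer choice.

```javascript
// Simplified robots.txt check (prefix rules only; wildcards are ignored).
function isPathAllowed(robotsTxt, path, userAgent = '*') {
  const agent = userAgent.toLowerCase();
  const groups = []; // [{ agents: [...], rules: [{ allow, prefix }] }]
  let current = null;
  let lastWasAgent = false;

  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split('#')[0].trim(); // drop comments
    const separator = line.indexOf(':');
    if (separator === -1) continue;
    const field = line.slice(0, separator).trim().toLowerCase();
    const value = line.slice(separator + 1).trim();

    if (field === 'user-agent') {
      // Consecutive User-agent lines share one group
      if (!lastWasAgent) {
        current = { agents: [], rules: [] };
        groups.push(current);
      }
      current.agents.push(value.toLowerCase());
      lastWasAgent = true;
      continue;
    }
    lastWasAgent = false;
    if ((field === 'allow' || field === 'disallow') && current && value) {
      current.rules.push({ allow: field === 'allow', prefix: value });
    }
  }

  // Prefer a group that names this agent, otherwise fall back to "*"
  const specific = groups.find(g =>
    g.agents.some(a => a && a !== '*' && agent.includes(a)));
  const group = specific || groups.find(g => g.agents.includes('*'));
  if (!group) return true;

  // The longest matching prefix wins; Allow wins ties
  let best = null;
  for (const rule of group.rules) {
    if (!path.startsWith(rule.prefix)) continue;
    if (!best || rule.prefix.length > best.prefix.length ||
        (rule.prefix.length === best.prefix.length && rule.allow)) {
      best = rule;
    }
  }
  return best ? best.allow : true;
}
```

A scraper would typically download /robots.txt once per site, then call a check like this before queuing each URL.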

Legal and Compliance Issues

Web scraping operates in a complex legal environment, especially after landmark cases like hiQ Labs v. LinkedIn, in which the Ninth Circuit held in 2019 (and again in 2022) that scraping publicly accessible data likely did not violate the US Computer Fraud and Abuse Act. To stay compliant when using tools like JavaScript-based scrapers, make sure to:

- Anonymize personal data before storing it.

- Document your data handling practices transparently.

- Respect intellectual property laws, including copyright restrictions.

- Use tools like Cheerio or Puppeteer to filter out personal identifiers during scraping.

These steps help align your scraping activities with legal requirements and ethical standards.
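As a rough illustration of filtering personal identifiers before storage, the sketch below redacts e-mail addresses from free text and replaces an identifier with a keyed hash using Node's built-in crypto module. The function names, the placeholder text, and the e-mail pattern are assumptions for this example. Keyed hashing is pseudonymization rather than full anonymization, so whether it is sufficient depends on the regulations that apply to you.

```javascript
const crypto = require('crypto');

// Deliberately simple e-mail pattern; it will not catch every valid address
const EMAIL_PATTERN = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;

// Replace e-mail addresses in free text with a placeholder
function redactEmails(text) {
  return text.replace(EMAIL_PATTERN, '[email removed]');
}

// Replace an identifier with an HMAC-SHA256 digest so records can still be
// grouped by user without storing the raw value. Keep `secret` out of the data.
function pseudonymize(value, secret) {
  return crypto.createHmac('sha256', secret).update(value).digest('hex');
}
```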

Minimizing Impact on Websites

To avoid overloading servers, ethical scraping requires thoughtful practices. For instance, as discussed in earlier examples with rate-limiting techniques and Puppeteer, you can reduce server strain by:

- Adding progressive delays between requests (e.g., 2-5 seconds).

- Including a clear identifier in your scraper's request headers.

- Limiting your scraping to public, unprotected endpoints that don't require authentication.
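The first two points can be sketched together as follows. This is a minimal example, assuming a hypothetical fetchFn(url, headers) request function that you provide; the scraper name and contact URL in the User-Agent string are placeholders to replace with your own.

```javascript
// Random delay in the range [minMs, maxMs)
function jitteredDelayMs(minMs = 2000, maxMs = 5000) {
  return minMs + Math.floor(Math.random() * (maxMs - minMs));
}

function sleep(ms) {
  return new Promise(resolve => setTimeout(resolve, ms));
}

// Placeholder identifier: use your own scraper name and contact page
const SCRAPER_HEADERS = {
  'User-Agent': 'example-scraper/1.0 (+https://example.com/scraper-info)'
};

// Visits URLs one at a time, pausing 2-5 seconds between requests by default.
// `fetchFn(url, headers)` is whatever request function you use.
async function politeCrawl(urls, fetchFn, { minMs = 2000, maxMs = 5000 } = {}) {
  const results = [];
  for (let i = 0; i < urls.length; i++) {
    if (i > 0) await sleep(jitteredDelayMs(minMs, maxMs));
    results.push(await fetchFn(urls[i], SCRAPER_HEADERS));
  }
  return results;
}
```

Randomizing the delay avoids a fixed request rhythm, and an identifying User-Agent gives site owners a way to contact you instead of simply blocking the traffic.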

These strategies help ensure stable website performance while collecting data responsibly. Combined with robust error handling and rate-limiting methods, they form a solid framework for ethical JavaScript-based web scraping.

Troubleshooting and Improving Your Web Scraper

Common Issues and Solutions

Scraping modern websites can be tricky due to measures like IP blocking, rate-limiting, and authentication requirements. Here's how to tackle some of the most frequent problems:

Blocked Requests: If your scraper gets blocked, try these steps:

- Use rotating proxies to spread requests across multiple IPs.

- Set realistic user-agent headers that mimic popular browsers.
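Proxy rotation can be as simple as cycling through a list. The sketch below shows a round-robin rotator; the helper name createProxyRotator and the proxy hosts are placeholders, and the object shape (protocol, host, port) follows the proxy option in the Axios request config.

```javascript
// Round-robin proxy rotation. The proxy hosts below are placeholders.
function createProxyRotator(proxies) {
  if (!proxies.length) throw new Error('At least one proxy is required');
  let index = 0;
  return function nextProxy() {
    const proxy = proxies[index];
    index = (index + 1) % proxies.length;
    return proxy;
  };
}

const nextProxy = createProxyRotator([
  { protocol: 'http', host: 'proxy-1.example.com', port: 8080 },
  { protocol: 'http', host: 'proxy-2.example.com', port: 8080 }
]);

// With Axios, the selected proxy can be passed in the request config:
// const response = await axios.get(url, { proxy: nextProxy() });
```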

Incorrect Data Extraction: Changes in website structure can affect your scraper's accuracy. To keep things running smoothly:

- Create flexible selectors that can adapt to minor HTML updates.

- Add error handling for missing elements and validate the data format after extraction.
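A small validation step after extraction makes silent breakage visible. The following sketch checks a record shaped like the output of the extractProductData example earlier in this guide; the function name validateProduct and the specific rules are assumptions to adapt to your own data.

```javascript
// Checks a scraped product record and returns a list of problems.
// Field names follow the extractProductData example earlier in this guide.
function validateProduct(record) {
  const errors = [];

  if (!record.title) {
    errors.push('title is missing');
  }

  // Accept prices such as "$1,299.00" or "19.99" by stripping everything
  // except digits and the decimal point
  const numericPrice = Number(String(record.price || '').replace(/[^0-9.]/g, ''));
  if (!record.price || Number.isNaN(numericPrice) || numericPrice <= 0) {
    errors.push('price is missing or not a positive number');
  }

  if (!Array.isArray(record.imageUrls)) {
    errors.push('imageUrls is not an array');
  }

  return { valid: errors.length === 0, errors };
}
```

If many records suddenly fail the same check, that usually points to a changed selector rather than bad data on the site.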

Dynamic Content Loading: Many websites use dynamic elements, which can complicate scraping. Address this by:

- Handling AJAX requests directly.

- Simulating user interactions when necessary.

Debugging Web Scrapers

Debugging your scraper effectively requires a structured approach and the right tools. Here's how you can streamline the process:

Browser Developer Tools: Tools like Chrome DevTools, paired with Puppeteer, are excellent for inspecting DOM elements, tracking network activity, and analyzing JavaScript behavior.

Node.js Debugging: Use built-in features to identify and resolve issues:

- Add detailed logs with the debug library.

- Use console.log strategically to trace problems.

- Enable the Node.js inspector for deeper analysis when necessary.

Enhancing Scraper Performance

Optimizing your scraper's performance is essential for handling larger workloads and improving efficiency. Here's how you can achieve better results:

Request Optimization:

- Send independent requests concurrently in small batches (for example, with Promise.all over Axios calls) instead of one at a time.

- Reuse connections with a keep-alive HTTP agent so each request does not open a new connection.

- Cache responses where appropriate to reduce unnecessary server requests.
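Two of these ideas, bounded concurrency and response caching, can be sketched without any extra dependencies. The helper names mapWithConcurrency and withCache are illustrative, and the cache here is a plain in-memory Map with no expiry, which is only suitable for short-lived runs.

```javascript
// Runs `worker` over `items` with at most `limit` tasks in flight at once.
async function mapWithConcurrency(items, limit, worker) {
  const results = new Array(items.length);
  let nextIndex = 0;

  // Each runner pulls the next unclaimed item until none are left
  async function runner() {
    while (nextIndex < items.length) {
      const index = nextIndex++;
      results[index] = await worker(items[index], index);
    }
  }

  const runnerCount = Math.min(limit, items.length);
  await Promise.all(Array.from({ length: runnerCount }, () => runner()));
  return results;
}

// Wraps a fetch function with an in-memory cache keyed by URL.
function withCache(fetchFn) {
  const cache = new Map();
  return function cachedFetch(url) {
    if (!cache.has(url)) {
      const pending = Promise.resolve().then(() => fetchFn(url));
      cache.set(url, pending);
      // Do not keep failed requests in the cache
      pending.catch(() => cache.delete(url));
    }
    return cache.get(url);
  };
}
```

Keeping the concurrency limit low is also a courtesy to the target server, so this fits alongside the rate-limiting approach shown earlier.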

Scaling Solutions:

For large-scale scraping, combine Puppeteer clusters with cloud infrastructure. Ensure you respect rate limits to avoid getting blocked.

Conclusion and Next Steps

Tools and Techniques Overview

In 2025, JavaScript offers a solid ecosystem for web scraping. Tools like Axios (for HTTP requests), Cheerio (for parsing), and Puppeteer (for browser automation) are widely used. For large-scale operations, the Apify SDK stands out as a go-to solution.

Putting These Tools to Use

JavaScript-based scraping solutions now help teams across industries make informed decisions. For example, marketing teams can use these tools to gather competitive insights, such as analyzing customer feedback.

Retailers have also seen real savings. By applying the Puppeteer techniques covered earlier, some have cut their price monitoring expenses by 40%. This highlights how modern web scraping can provide tangible benefits.

Staying Ahead in Web Scraping

Web scraping isn't static. As websites enhance their defenses, staying compliant and efficient requires constant adjustments. The rate-limiting strategies covered earlier, for example, show how performance tuning is essential for long-term success.

Here are three areas to prioritize:

- Keep JavaScript libraries up to date: Regular updates ensure compatibility and efficiency.

- Stay informed on data privacy laws: Regulations are always evolving.

- Fine-tune rate-limiting and scaling: These adjustments help maintain performance and avoid detection.
