- Harsh Maur

- December 20, 2024

- 7 Mins read

- Scraping

Extract Data from JavaScript Pages with Puppeteer

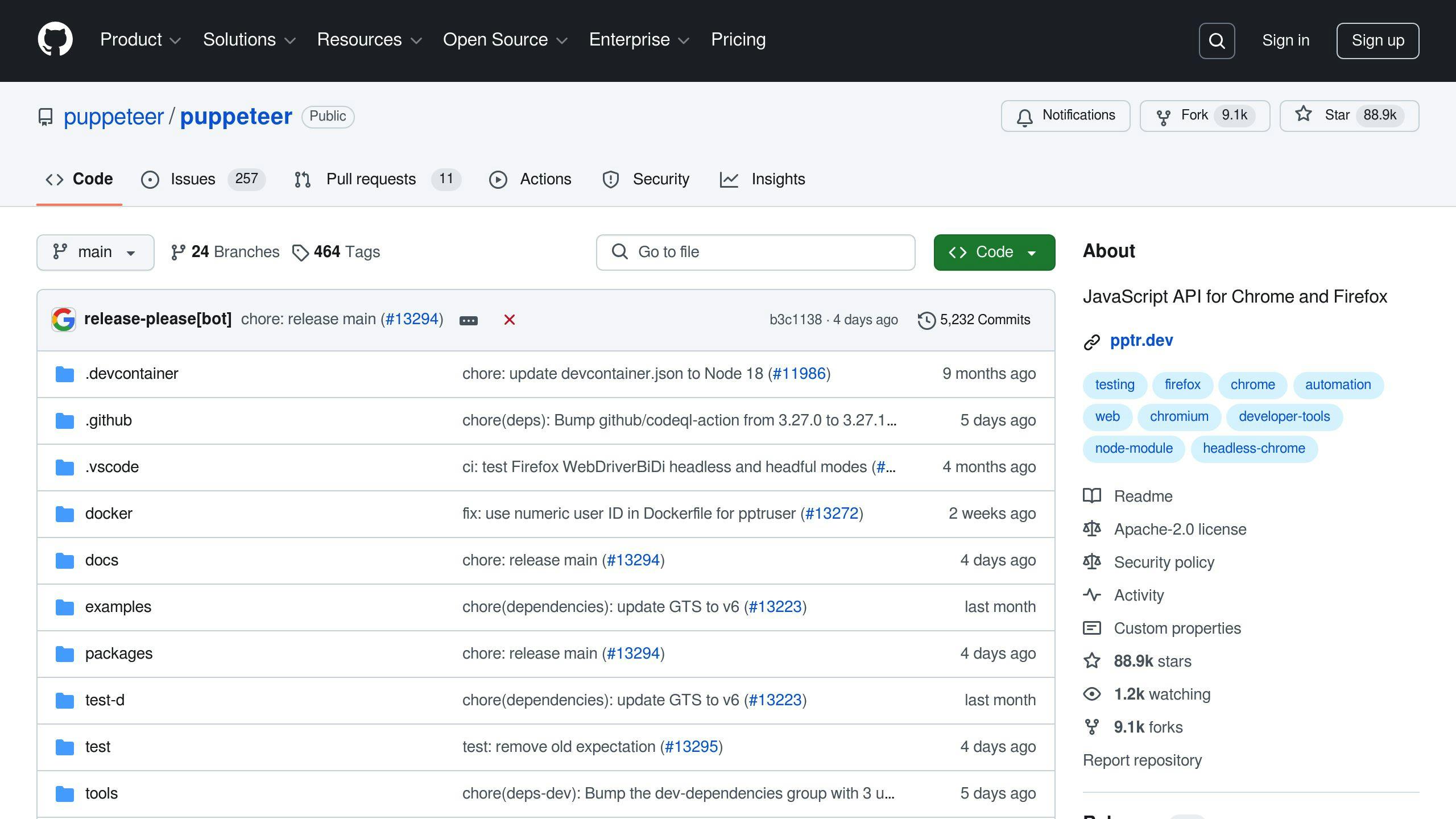

Want to scrape data from JavaScript-heavy websites? Puppeteer makes it easy. This Node.js library automates Chrome or Chromium, letting you extract dynamic content that traditional tools miss. Here's what you'll learn:

- Why Puppeteer is essential: It renders JavaScript, mimicking real browser behavior for reliable scraping.

- How to get started: Install Puppeteer, set up a basic script, and handle dynamic content with waitForSelector.

- Advanced techniques: Extract data from iframes, manage pagination, and handle infinite scrolling effectively.

- Ethical scraping tips: Follow website rules, avoid server overload, and comply with privacy laws.

Whether you're scraping multi-page sites or dynamic content, this guide covers everything you need to know to use Puppeteer responsibly and efficiently.

Getting Started with Puppeteer

How to Install Puppeteer

Before you start using Puppeteer for web scraping, make sure you have Node.js installed. To install Puppeteer, use the following npm command:

npm install puppeteer

This will install Puppeteer and download a compatible browser build (recent Puppeteer releases fetch Chrome for Testing rather than Chromium).

Basic Puppeteer Setup

Here’s a simple script to help you get started with scraping JavaScript-rendered pages:

const puppeteer = require('puppeteer');

async function startScraping() {

const browser = await puppeteer.launch({

headless: true,

defaultViewport: { width: 1920, height: 1080 }

});

const page = await browser.newPage();

await page.setUserAgent('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36');

try {

await page.goto('https://example.com', {

waitUntil: 'networkidle2'

});

// Your scraping code goes here

} catch (error) {

console.error('Navigation failed:', error);

}

}

startScraping();

This script sets up the basics for scraping JavaScript-heavy pages. Key configurations include:

- headless: true: Runs Chrome in the background without a visible UI, improving speed.

- defaultViewport: Simulates a screen size for a desktop browser.

- setUserAgent: Reduces the chances of detection by mimicking a real browser.

- waitUntil: 'networkidle2': Treats navigation as finished once there have been no more than two active network connections for at least 500 ms, a practical signal that the page has finished loading.

To debug or watch the browser in action, set headless: false.

Handling Dynamic Content

When dealing with dynamic content, it’s essential to wait for specific elements to load before extracting data. Here’s an example:

// Wait for a specific element to appear

await page.waitForSelector('.content-class', {

timeout: 5000,

visible: true

});

// Extract data after the element is loaded

const data = await page.evaluate(() => {

const element = document.querySelector('.content-class');

return element ? element.textContent : null;

});

The waitForSelector method ensures the element is present and visible before proceeding. This prevents errors caused by incomplete page rendering. Always wrap critical operations in try-catch blocks and set timeouts to handle slow-loading pages or unexpected issues.
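The try-catch advice above can be sketched as a small helper. This is a minimal sketch rather than anything Puppeteer ships: `extractTextOrNull` is a hypothetical name, and it assumes `page` is a Puppeteer page (or any object exposing `waitForSelector` and `$eval`). It treats a timeout as "the element never appeared" and rethrows every other error.

```javascript
// Hypothetical helper: wait for a selector, then read its trimmed text.
// Returns null when the element does not become visible within the timeout.
async function extractTextOrNull(page, selector, timeoutMs = 5000) {
  try {
    await page.waitForSelector(selector, { timeout: timeoutMs, visible: true });
  } catch (error) {
    // Puppeteer reports a timed-out wait with an error named 'TimeoutError'.
    if (error && error.name === 'TimeoutError') {
      return null;
    }
    throw error; // Anything else (closed page, invalid selector) is a real failure.
  }
  const text = await page.$eval(selector, (element) => element.textContent);
  return (text ?? '').trim();
}
```

Returning null for a timeout lets the calling code decide whether a missing element is an error or just an empty result.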

Finally, don’t forget to close the browser:

await browser.close();

Closing the browser ensures resources are freed up and prevents memory leaks.
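One way to make sure the browser is closed even when scraping throws is a try/finally wrapper. The sketch below is an illustration under assumptions: `withBrowser` is a hypothetical name, and `launch` is any function that resolves to a browser object with a `close()` method, such as `() => puppeteer.launch({ headless: true })`.

```javascript
// Hypothetical wrapper: run `task(browser)` and always close the browser,
// whether the task resolves or throws.
async function withBrowser(launch, task) {
  const browser = await launch();
  try {
    return await task(browser);
  } finally {
    // Runs on success and on failure, so no Chrome process is left behind.
    await browser.close();
  }
}

// Usage idea (assumes puppeteer has been required elsewhere):
// const title = await withBrowser(
//   () => puppeteer.launch({ headless: true }),
//   async (browser) => {
//     const page = await browser.newPage();
//     await page.goto('https://example.com');
//     return page.title();
//   }
// );
```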

With this setup, you’re ready to start navigating web pages and collecting the data you need.

Using Puppeteer to Extract Data

Navigating Web Pages with Puppeteer

Navigating web pages with Puppeteer involves managing page loads and dynamic elements effectively:

const page = await browser.newPage();

try {

await page.goto('https://example.com', {

waitUntil: 'networkidle2',

timeout: 30000

});

} catch (error) {

console.error('Navigation failed:', error);

}

To lower the chances of detection during scraping, you can take a few steps:

// Simulate human-like behavior with a user agent string

await page.setUserAgent('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36');

// Add random delays between actions (page.waitForTimeout was removed in Puppeteer v22)

await new Promise(resolve => setTimeout(resolve, Math.random() * 1000 + 2000));

// Monitor browser console output for debugging

page.on('console', msg => console.log(msg.text()));

Once you're on the target page, you can proceed to extract the data you need from its dynamic content.
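If you pause between actions in several places, it may help to pull the delay logic into two small helpers. This is a sketch with hypothetical names (`randomDelayMs`, `sleep`); it uses a plain timer, so it does not depend on any Puppeteer wait method.

```javascript
// Pick a delay in the range [minMs, maxMs).
// `random` is injectable so the function can be tested deterministically.
function randomDelayMs(minMs, maxMs, random = Math.random) {
  if (minMs < 0 || maxMs < minMs) {
    throw new RangeError('expected 0 <= minMs <= maxMs');
  }
  return Math.floor(minMs + random() * (maxMs - minMs));
}

// Pause for the given number of milliseconds using a plain timer.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Usage idea: wait between 2 and 3 seconds before the next action.
// await sleep(randomDelayMs(2000, 3000));
```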

Extracting Data from Dynamic Pages

For JavaScript-heavy pages, these techniques can help you extract data effectively:

// Extract data from a single element

const title = await page.$eval('h1', element => element.textContent);

// Extract data from multiple elements

const links = await page.$$eval('a', elements =>

elements.map(element => ({

text: element.textContent,

href: element.href

}))

);

If the page loads more content as you scroll, use this method to capture everything:

// Handle infinite scrolling

async function scrapeInfiniteScroll() {

let previousHeight = 0;

while (true) {

const currentHeight = await page.evaluate('document.body.scrollHeight');

if (currentHeight === previousHeight) break;

await page.evaluate('window.scrollTo(0, document.body.scrollHeight)');

await new Promise(resolve => setTimeout(resolve, 2000));

previousHeight = currentHeight;

}

}
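On feeds that never stop loading, a loop like the one above can run indefinitely. A bounded variant might look like the sketch below. The function name `scrollUntilStable` and its options (`maxScrolls`, `pauseMs`) are hypothetical, and `page` is assumed to be a Puppeteer page whose `evaluate` accepts a string expression, as in the example above.

```javascript
// Scroll to the bottom repeatedly until the page height stops growing
// or the scroll budget is used up, whichever comes first.
async function scrollUntilStable(page, { maxScrolls = 20, pauseMs = 2000 } = {}) {
  let previousHeight = await page.evaluate('document.body.scrollHeight');
  for (let scrolls = 1; scrolls <= maxScrolls; scrolls++) {
    await page.evaluate('window.scrollTo(0, document.body.scrollHeight)');
    // Give lazy-loaded content time to arrive before measuring again.
    await new Promise((resolve) => setTimeout(resolve, pauseMs));
    const currentHeight = await page.evaluate('document.body.scrollHeight');
    if (currentHeight === previousHeight) {
      return { scrolls, reachedEnd: true };
    }
    previousHeight = currentHeight;
  }
  // Budget exhausted while the page was still growing.
  return { scrolls: maxScrolls, reachedEnd: false };
}
```

The returned `reachedEnd` flag tells you whether the page stopped growing or the limit cut the loop short, which is useful when deciding whether the extracted data is complete.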

For dynamic content that appears after a delay, use waitForFunction:

await page.waitForFunction(

() => document.querySelector('#dynamic-content') !== null,

{ timeout: 5000 }

);

const dynamicData = await page.$eval('#dynamic-content',

element => element.textContent

);

When dealing with iframes, locate the specific frame and extract the data you need:

const frame = page.frames().find(frame => frame.name() === 'contentFrame');

if (frame) {

const frameData = await frame.$eval('.content',

element => element.textContent

);

}

These strategies are key for scraping modern web applications that rely on JavaScript to display content.

Advanced Puppeteer Techniques

Scraping Data Across Multiple Pages

When scraping data across several pages, you can loop through each page while checking for the presence of a 'next' button. Here's a practical example:

async function scrapeMultiplePages(startUrl, maxPages = 10) {

const results = [];

let currentPage = 1;

let hasNextPage = true;

while (hasNextPage && currentPage <= maxPages) {

try {

await page.goto(`${startUrl}?page=${currentPage}`, {

waitUntil: 'networkidle2'

});

const pageData = await page.evaluate(() => {

const items = document.querySelectorAll('.item');

return Array.from(items).map(item => ({

title: item.querySelector('.title')?.textContent,

price: item.querySelector('.price')?.textContent,

description: item.querySelector('.description')?.textContent

}));

});

results.push(...pageData);

hasNextPage = await page.evaluate(() => {

const nextButton = document.querySelector('.pagination .next:not(.disabled)');

return !!nextButton;

});

currentPage++;

await new Promise(resolve => setTimeout(resolve, Math.random() * 2000 + 1000));

} catch (error) {

console.error(`Error on page ${currentPage}:`, error);

break;

}

}

return results;

}
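The example above builds each URL as `${startUrl}?page=${currentPage}`, which produces a malformed URL when `startUrl` already carries a query string. A small helper based on the standard `URL` class avoids that. This is a sketch: `buildPageUrl` is a hypothetical name, and the `page` query parameter is an assumption about the target site.

```javascript
// Set (or replace) the page-number query parameter on a URL.
function buildPageUrl(startUrl, pageNumber, paramName = 'page') {
  const url = new URL(startUrl);
  // searchParams.set overwrites an existing value instead of appending a second one.
  url.searchParams.set(paramName, String(pageNumber));
  return url.toString();
}

// Usage idea inside the pagination loop:
// await page.goto(buildPageUrl(startUrl, currentPage), { waitUntil: 'networkidle2' });
```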

For pages with infinite scroll, you can use the method outlined in the "Extracting Data from Dynamic Pages" section to handle continuous content loading. After managing multi-page navigation, you may encounter embedded content inside iframes, which requires additional handling.

Working with IFrames and Exporting Data

To extract data from iframes, you'll need to access the iframe's content independently from the main page. Here's how:

async function extractIframeContent(frameUrlPart) {

// Match the frame by a substring of its URL (this is not a CSS selector)

const frame = page.frames().find(f => {

return f.url().includes(frameUrlPart);

});

if (!frame) {

throw new Error('Frame not found');

}

await frame.waitForSelector('.content-selector');

return frame.$eval('.content-selector',

element => element.textContent

);

}
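When you know the iframe's CSS selector rather than its URL, another option is to wait for the `<iframe>` element and ask its handle for the frame via `contentFrame()`. The sketch below assumes `page` is a Puppeteer page; `getFrameBySelector` is a hypothetical helper name.

```javascript
// Resolve the Frame behind an <iframe> element matched by a CSS selector.
async function getFrameBySelector(page, iframeSelector, timeoutMs = 5000) {
  // Wait until the <iframe> element itself exists in the parent document.
  const handle = await page.waitForSelector(iframeSelector, { timeout: timeoutMs });
  // contentFrame() resolves to the frame owned by that element, or null if there is none.
  const frame = await handle.contentFrame();
  if (!frame) {
    throw new Error(`No frame attached to ${iframeSelector}`);
  }
  return frame;
}

// Usage idea:
// const frame = await getFrameBySelector(page, 'iframe#content');
// const text = await frame.$eval('.content-selector', (el) => el.textContent);
```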

Puppeteer also allows you to create PDFs with customizable settings:

async function exportToPDF(outputPath) {

await page.pdf({

path: outputPath,

format: 'A4',

margin: {

top: '20px',

right: '20px',

bottom: '20px',

left: '20px'

},

printBackground: true,

displayHeaderFooter: true

});

}
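The PDF export above captures the rendered page. For the scraped records themselves, a structured format such as CSV is usually easier to work with. The following is a minimal sketch with a hypothetical `toCsv` helper; it assumes each record is a flat object, like the `{ title, price, description }` items collected earlier.

```javascript
// Convert an array of flat objects into CSV text with a header row.
function toCsv(rows, columns) {
  const escapeCell = (value) => {
    const text = value === null || value === undefined ? '' : String(value);
    // Quote cells containing commas, quotes, or line breaks; double any inner quotes.
    return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
  };
  const header = columns.map(escapeCell).join(',');
  const lines = rows.map((row) => columns.map((column) => escapeCell(row[column])).join(','));
  return [header, ...lines].join('\n');
}

// Usage idea (results comes from your own scraping code):
// const fs = require('fs');
// fs.writeFileSync('results.csv', toCsv(results, ['title', 'price', 'description']));
```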

If you're dealing with large-scale scraping projects that involve multi-page navigation or iframe content, managed services like Webscraping HQ can simplify the process. Their platform supports tasks like pagination, proxy rotation, and data formatting while adhering to website terms of service:

"When dealing with complex multi-page scraping tasks, managed services can handle the heavy lifting of pagination, proxy rotation, and data formatting while ensuring compliance with website terms of service."


Tips for Effective Web Scraping

Legal and Ethical Web Scraping

Scraping data isn't just about technical know-how; it's also essential to follow ethical guidelines and comply with legal regulations. Start by checking the website's robots.txt file and terms of service. The robots.txt file usually outlines which parts of the site are accessible to automated tools.
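A robots.txt check can be automated before you visit a path. The sketch below is deliberately simplified and is not a full parser: it only reads Disallow rules that apply to `User-agent: *` and does plain prefix matching, ignoring Allow rules, wildcards, and agent-specific groups. The function names are hypothetical, and fetching the file is left to your own code.

```javascript
// Simplified sketch: collect Disallow prefixes from groups that include `User-agent: *`.
function parseDisallowedPaths(robotsTxt) {
  const disallowed = [];
  let inWildcardGroup = false;
  let lastWasUserAgent = false;
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split('#')[0].trim(); // Drop comments.
    const separator = line.indexOf(':');
    if (separator === -1) continue;
    const field = line.slice(0, separator).trim().toLowerCase();
    const value = line.slice(separator + 1).trim();
    if (field === 'user-agent') {
      // Consecutive User-agent lines share one group of rules.
      inWildcardGroup = (lastWasUserAgent && inWildcardGroup) || value === '*';
      lastWasUserAgent = true;
    } else {
      lastWasUserAgent = false;
      if (field === 'disallow' && inWildcardGroup && value !== '') {
        disallowed.push(value);
      }
    }
  }
  return disallowed;
}

// True when no collected Disallow prefix matches the start of the path.
function isPathAllowed(robotsTxt, path) {
  return !parseDisallowedPaths(robotsTxt).some((prefix) => path.startsWith(prefix));
}
```

For production use, a dedicated robots.txt parsing library is a safer choice than this sketch, since the real format has more rules than are handled here.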

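If you're using Puppeteer for scraping, a launch configuration like the one below keeps the scraper headless and stores its browser profile in a dedicated directory. Note that --no-sandbox disables Chrome's security sandbox, so use it only where the sandbox cannot run (for example, in some container setups) and only with content you trust: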

const browser = await puppeteer.launch({

headless: true,

args: ['--no-sandbox'],

userDataDir: './user-data'

});

When dealing with sensitive information, ensure compliance with privacy laws like GDPR and CCPA. This means using encryption, storing data securely, and keeping detailed logs to maintain accountability. Adding rate limiting is another responsible practice - it prevents overloading servers and shows respect for the website's resources.
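Rate limiting can be as simple as enforcing a minimum gap between requests. The sketch below uses a hypothetical `createRateLimiter` helper that only computes how long to wait; the actual pause and the request stay in your own code. The one-second interval in the usage comment is an arbitrary example, not a recommended value for any particular site.

```javascript
// Returns a function that reserves the next request slot and reports
// how many milliseconds the caller should wait before using it.
// `now` is injectable so the limiter can be tested with a fake clock.
function createRateLimiter(minIntervalMs, now = Date.now) {
  let nextAllowedAt = 0;
  return function reserve() {
    const current = now();
    const startAt = Math.max(current, nextAllowedAt);
    nextAllowedAt = startAt + minIntervalMs;
    return startAt - current;
  };
}

// Usage idea: at most one navigation per second.
// const reserve = createRateLimiter(1000);
// for (const url of urls) {
//   await new Promise((resolve) => setTimeout(resolve, reserve()));
//   await page.goto(url, { waitUntil: 'networkidle2' });
// }
```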

Using Managed Web Scraping Services

For large-scale scraping tasks involving JavaScript-heavy websites, managed services like Webscraping HQ can be a game-changer. These platforms handle the heavy lifting with tools and features that simplify the process.

Key features include:

- Handling dynamic content loading across multiple pages

- Managing anti-bot protections like CAPTCHAs

- Delivering structured data in your preferred format

- Monitoring websites for updates regularly

Managed services streamline the process, making it easier to focus on extracting the data you need without getting bogged down in technical challenges.


Conclusion

Puppeteer gives you precise control over Chrome and Chromium browsers, making it a great tool for gathering data from JavaScript-heavy pages. However, using it responsibly is crucial for maintaining ethical and effective web scraping practices.

With methods like page.waitForSelector() and page.waitForNavigation(), you can reliably extract data even from complex websites. Puppeteer’s API also supports advanced techniques while allowing you to implement practices like rate limiting to avoid overloading servers.

By combining the tips in this guide with a thoughtful approach, you can make your data extraction process more efficient. If scalability and ease of use are priorities, managed services can complement your efforts.

Whether you decide to dive into Puppeteer or use managed solutions, focus on scraping responsibly and staying updated on best practices as web technologies evolve.

FAQs

How do you make Puppeteer wait for a page to load?

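Puppeteer offers waitForNavigation and waitForSelector to ensure pages and elements are fully loaded before proceeding. Use waitForNavigation for overall page loads and waitForSelector for targeting specific elements. Start waitForNavigation before, or together with, the action that triggers the navigation (such as a click); if it is called after the navigation has already finished, it simply waits until it times out.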

// Wait for the page to finish loading

await page.waitForNavigation({

waitUntil: 'networkidle2',

timeout: 10000

});

// Wait for a specific element to appear

await page.waitForSelector('#targetElement');

| Method | Best Use Case | Code Example |
|---|---|---|
| networkidle2 | General page loading | waitUntil: 'networkidle2' |
| load | Full page load | waitUntil: 'load' |
| domcontentloaded | HTML content loaded | waitUntil: 'domcontentloaded' |
| waitForSelector | Specific element load | waitForSelector('#element') |

For error handling, wrap your waiting logic in a try-catch block:

try {

await page.waitForNavigation({ waitUntil: 'networkidle2' });

} catch (error) {

console.error(error);

}
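When a click triggers the navigation, a common pattern is to start the wait and the click together with `Promise.all`, so the navigation cannot finish before the wait begins. The helper below is a sketch; `clickAndWaitForNavigation` is a hypothetical name, and `page` is assumed to be a Puppeteer page.

```javascript
// Click an element and wait for the navigation that the click triggers.
async function clickAndWaitForNavigation(page, selector, timeoutMs = 10000) {
  const [response] = await Promise.all([
    // Register the wait first so a fast navigation is not missed.
    page.waitForNavigation({ waitUntil: 'networkidle2', timeout: timeoutMs }),
    page.click(selector),
  ]);
  return response;
}

// Usage idea:
// await clickAndWaitForNavigation(page, '.pagination .next');
```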

"Using waitForSelector instead of waitForNavigation when waiting for specific elements can improve performance by reducing unnecessary waits" [1]

Mastering these waiting techniques ensures complete and accurate data capture, even on dynamic, JavaScript-heavy websites.
